It’s 2075 and…

A playbook on the impact of AI on society and work, as seen from the first steps of the AI revolution

A playbook on AI’s societal impact and how we shape it

It’s 2075 and…

You make tea while the kettle hums on recycled heat from the neighbourhood compute hub. Your personal “pair” has already cleared the bureaucratic undergrowth: tax return filed, travel rebooked after the rail strike, care rota swapped with your neighbour so you can catch your daughter’s school play. You still “work,” though the word is gentler now. Three days a week you do complex case work for an insurer’s risk team, mostly judgement calls the agents are not allowed to make. One day you mentor apprentices who are learning to supervise swarms of AI. Fridays are civic: you help your borough test an algorithm for benefit appeals, alongside a citizens’ data trust that actually owns the training records.

If that sounds optimistic, it is meant to be, though not fanciful. It takes today’s evidence and pushes it forward fifty years. Think of this as a playbook, not sci-fi.

What really changes in a society after mass AI adoption

1) Productivity lifts are real, but uneven, and governance determines who benefits. Early deployments of AI “copilots” raised output and quality in information work. In customer service, for example, a large field study found that giving staff an AI assistant trained on expert behaviour produced substantial gains, especially for the less experienced. Those effects are likely to compound as AI agents become more autonomous and as processes are redesigned around them. The uneven bit matters: exposure is highest for white-collar tasks, which is why policy and firm design decide whether this is a tide that lifts most boats or a narrow yacht club. (NBER)

2) The workweek ratchets down, but through redesign, not decree. The path is already visible in high-quality trials. The UK’s four-day week pilots showed stable or improved performance with lower burnout and attrition, and most firms kept the policy. Iceland’s multi-year experiments found similar outcomes and normalised shorter hours across the economy. The lesson for 2075 is simple: combine automation with work-process surgery and you can trade hours for wellbeing without sinking output. (UCD Research Repository, digit-research.org, Axios)

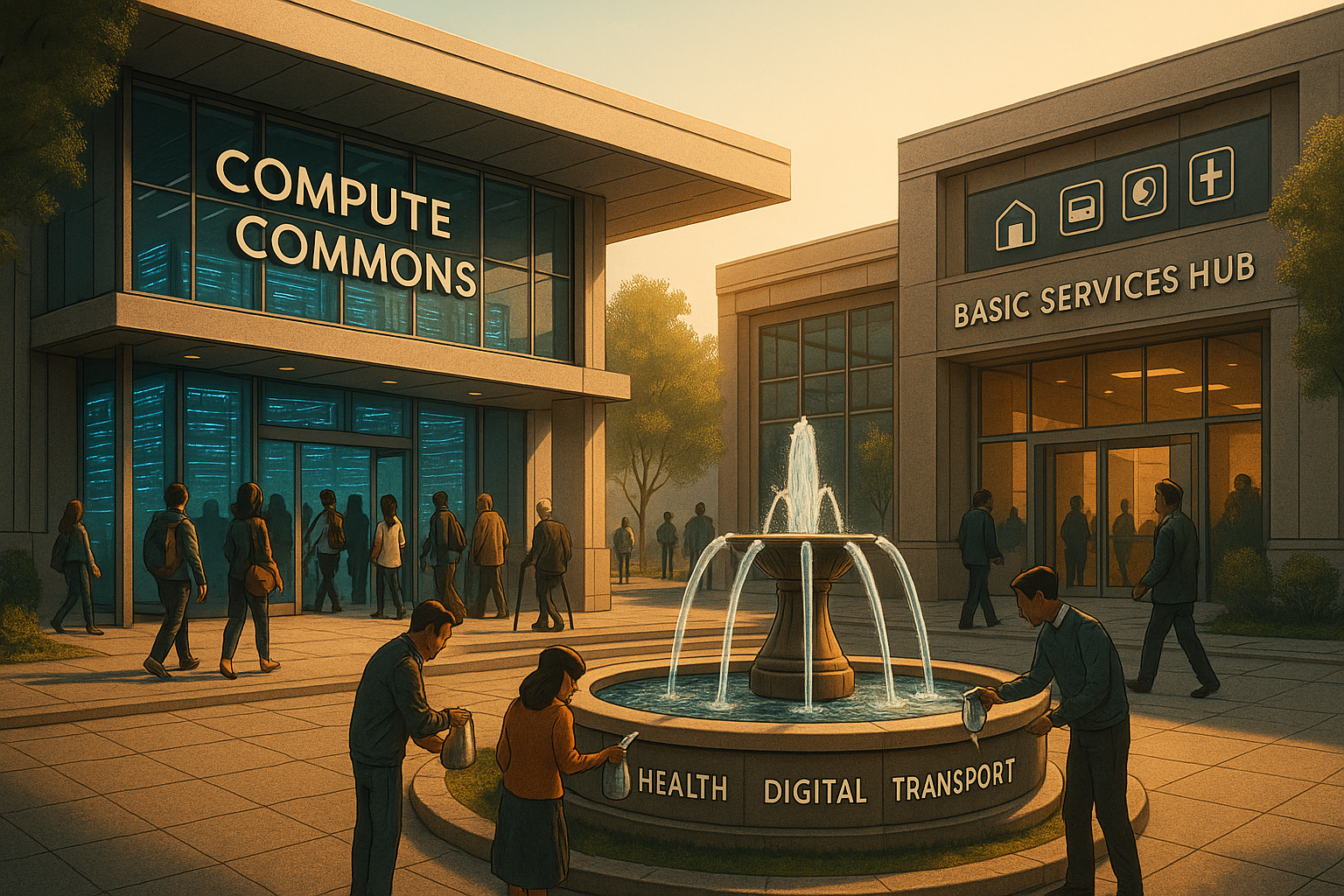

3) Safety nets morph into “commons,” not just cheques. Some countries tested basic income; the best evidence so far says it improves wellbeing and lowers stress, but it is not a magic bullet for jobs. A parallel movement built Universal Basic Services (UBS): guaranteeing essentials like transport, childcare, digital access and housing at service cost, which stretches every earned pound further. By 2075 the blend looks more like UBS plus targeted income floors than a single universal cash payment. (University College London)

4) Data and compute become civic infrastructure. Today’s EU AI Act and global safety compacts create a regulatory scaffold. The bigger structural shift is treating compute as a governable lever: visible, quantifiable and chokepointed, which makes it practical for safety audits and access policy. The UK’s public AI supercomputers point to a model where nations invest in shared capacity that researchers, SMEs and public services can actually use. By 2075, most advanced economies will run public-option compute and evidence-based access rules, much like roads and spectrum today. (Digital Strategy, cdn.governance.ai, GOV.UK)

5) Demography and climate keep us honest. Ageing, chronic disease and adaptation to climate stress guarantee vast demand for human-centred work. International organisations already project multi-million shortfalls in health and care roles. Even with automation, societies that thrive in 2075 will have invested in care capacity, task redesign and mobility pathways into those jobs. (WHO Apps, International Labour Organization)

So how do people earn a living?

The 2075 income stack is plural, not binary.

Wages for “human-plus” roles. These are judgement, exception handling, safety oversight, relationship work and creative synthesis. AI lifts the floor and the ceiling, but leaves a core of high-leverage human tasks. Autor’s and Acemoglu’s arguments are instructive: design AI to create new tasks and complements, and avoid “so-so automation” that cuts pay without meaningful productivity gains. (World Economic Forum, Oxford Academic)

Dividends from shared assets. Alaska’s oil fund was the prototype for resource dividends; future equivalents include compute or data dividends paid to residents via data trusts or national compute funds. They do not replace wages; they stabilise households and local demand. Early “data dignity” work and UK data-trust pilots give the legal plumbing for this. (The ODI)

Civic stipends and service credits. If we collectively demand auditability, care and stewardship, we will pay for it. Expect structured civic work to be normalised and paid, not just “volunteered.” The mechanism sits neatly inside a UBS logic. (LSE Public Policy Review)

Micro-enterprise with agents. Individuals run swarms of agents to operate shops, courses, niche services and media channels. The agent market is real, but noisy and sometimes over-hyped; design and trust frameworks mature it into a reliable income layer by 2075. (CRN, IT Pro)

Who pays for what? Taxes tilt toward outcomes that scale with automation: consumption and land, yes, but also usage-based levies on frontier compute paired with public returns from national compute facilities. The old “robot tax” idea keeps resurfacing; whatever you call it, the gist is to recycle some productivity into broad prosperity without throttling useful innovation. (cdn.governance.ai)

What jobs exist?

By 2075, most occupations are bundles of tasks, and the bundles have been rebundled.

Explain, oversee, and certify. AI safety officers, algorithm auditors, compute stewards, incident responders and “model risk” professionals. The EU AI Act’s risk tiers and audit duties foreshadow entire career ladders. (European Parliament)

Edge-case specialists. Insurance, law, medicine and public services still need human judgement on atypical, ethically loaded or socially sensitive cases. The early productivity studies showed the technology raises the baseline; these people hold the line. (NBER)

Care and community. Health professionals, allied health, social care coordinators, neurodiversity coaches, ageing-in-place retrofitting teams, climate resilience crews. Demand pressure here is demographic, not faddish. (WHO Apps)

Systems renovators. The green rebuild of housing, grids and transport creates skilled trades that work alongside robotics and agents. Procurement and permitting are retooled with AI, but boots still meet ground.

Education as an industry of transitions. Lifelong learning stops being a slogan. The UK’s “Lifelong Learning Entitlement” points at a funding chassis that, once coupled to AI tutors and employer academies, becomes the real on-ramp for mid-career mobility. (HEPI)

What skills will pay the bills?

Employers’ lists have been remarkably consistent: analytical thinking, creative thinking, resilience and social influence top the charts, with digital fundamentals assumed. You also need data stewardship, prompt-to-process design, and the discipline of running agents safely. These are not vibes; they show up in employer surveys and international skills outlooks. (World Economic Forum Reports, OECD)

The structure of society

The median society in 2075 looks less like a “post-work” utopia and more like a re-balanced social contract.

Time: standard weeks in the low-30s for full-timers, made possible by process redesign and automation, not by wishful thinking. (UCD Research Repository)

Commons: UBS plus targeted income floors, plus public compute and data stewardship. (University College London)

Governance: risk-tiered AI law, compute governance for visibility and enforcement, cross-border safety science via compacts like Bletchley. (Digital Strategy, cdn.governance.ai, GOV.UK)

Place: compute hubs are as normal as libraries. Waste-heat warming your cuppa is not a joke; next-gen systems already recycle heat and run on low-carbon power. (TechRadar)

Risks to manage

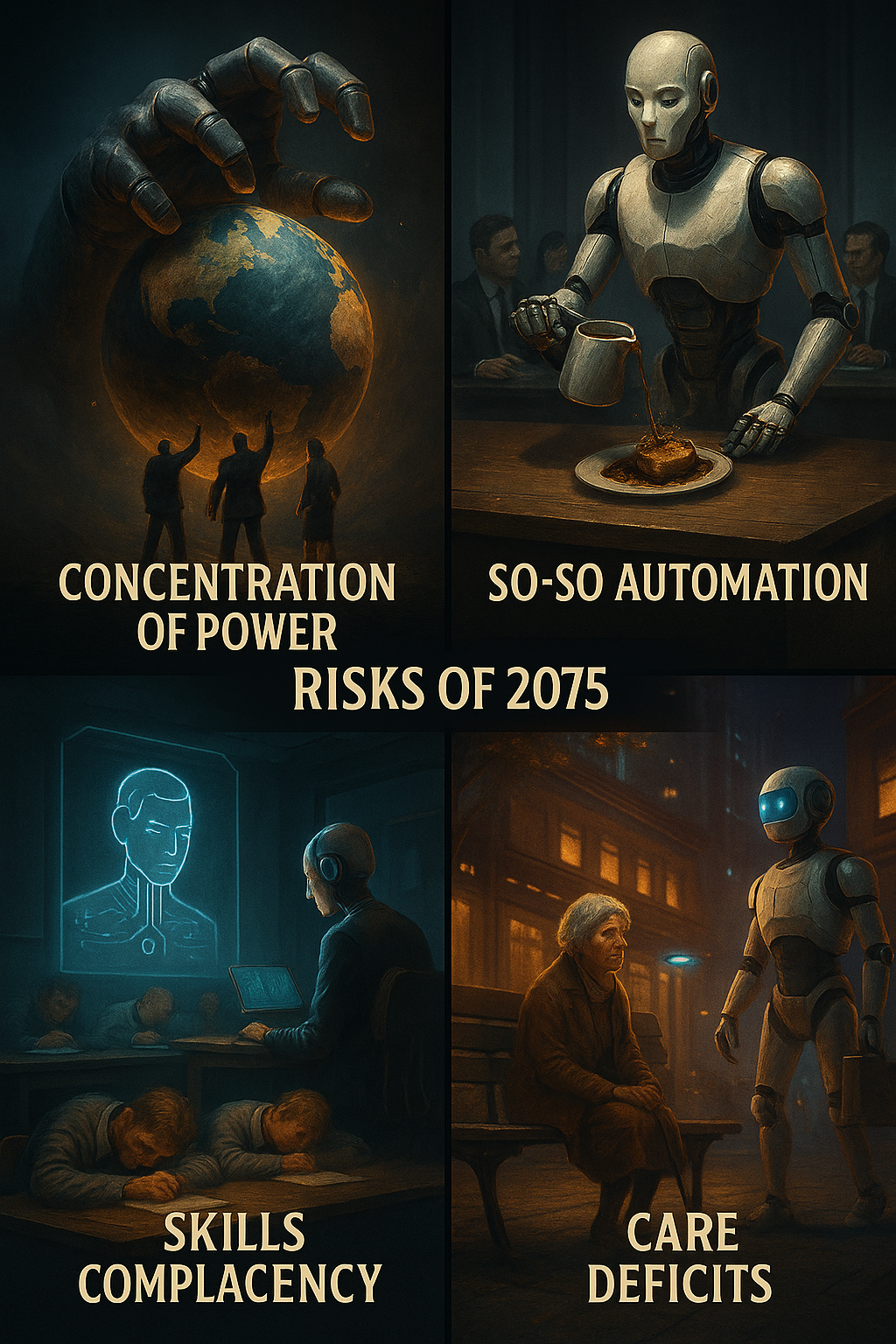

Concentration of power in models and compute can lock in inequality if left alone. Compute governance and competition policy are the antidotes. (governance.ai)

So-so automation hollows out wages without raising productivity. Tie incentives to complementarity and task creation. (Oxford Academic)

Skills complacency is fatal. Without funded pathways and time to learn, the gains pool at the top. (OECD)

Care deficits become the rate limiter on growth and social cohesion if we do not invest. (WHO Apps)

The 2075 Playbook: headline takeaways

Key observations

AI lifts productivity and quality when paired with workflow redesign; the biggest gains arrive in the hands of less experienced workers, which narrows gaps if we let it. (NBER)

Shorter weeks are sustainable when you change how work is done, not just how long it lasts. (UCD Research Repository)

The durable social model is UBS + targeted income floors + public compute, not cash alone. (University College London, GOV.UK)

Compute and data will be governed like utilities, with safety, access and audit built in. (cdn.governance.ai)

Health, care and climate work anchor employment demand for decades. (WHO Apps)

Actions for governments

Build the commons: legislate and fund Universal Basic Services, including digital and transport; pair with a minimum income floor tested through pilots that actually publish results.

Public compute: scale national AI research resources with transparent access and safety audit obligations; publish a compute registry and safety reporting akin to aviation. (GOV.UK)

Guardrails that travel: implement risk-based AI law with cross-border evaluation partnerships; fund an independent safety science network. (Digital Strategy, GOV.UK)

Pay for care, at last: expand training, migration compacts and task redesign to close projected gaps. (WHO Apps)

Tax for the machine age: shift burden toward consumption, land and compute usage where appropriate, recycle proceeds into skills and services; evaluate robot-tax-style instruments pragmatically.

Actions for employers

Automate the dull, enrich the role: measure time returned and reinvest it in quality, creativity and customer resolution.

Design for complementarity: use AI to create new tasks and products, not just strip labour. Track wage and task mix as hard KPIs. (Oxford Academic)

Guarantee learning time: ring-fence paid hours for skills, tied to clear internal pathways.

Stand up a model-risk function: treat AI the way finance treats risk models. Inventory, monitor, stress-test and explain every system, in the spirit of the EU AI Act. (European Parliament)
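For teams wondering what the inventory step of a model-risk function might look like in practice, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the `ModelRecord` fields, the tier labels (loosely echoing risk-tiered regulation, not the Act's legal categories) and the review intervals are hypothetical, not drawn from any regulation or standard.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class ModelRecord:
    """One entry in a hypothetical AI model inventory."""
    name: str
    owner: str
    risk_tier: str            # illustrative labels: "minimal", "limited", "high"
    last_stress_test: date
    explanation_doc: str = ""  # pointer to a plain-language explanation, if any


# Assumed review intervals per tier, in days (illustrative values only).
REVIEW_INTERVAL_DAYS = {"minimal": 365, "limited": 180, "high": 90}


def overdue(record: ModelRecord, today: date) -> bool:
    """True if the last stress test is older than the tier's interval."""
    limit = timedelta(days=REVIEW_INTERVAL_DAYS[record.risk_tier])
    return today - record.last_stress_test > limit


def review_queue(inventory: list[ModelRecord], today: date) -> list[str]:
    """Names of models with an overdue stress test or no explanation on file."""
    return [
        r.name for r in inventory
        if overdue(r, today) or not r.explanation_doc
    ]


inventory = [
    ModelRecord("claims-triage", "risk-team", "high", date(2025, 1, 1), "docs/claims.md"),
    ModelRecord("faq-bot", "support", "minimal", date(2025, 1, 1)),
    ModelRecord("pricing", "actuarial", "limited", date(2025, 3, 1), "docs/pricing.md"),
]
queue = review_queue(inventory, today=date(2025, 6, 1))
```

With these made-up records, the high-tier model is flagged because its stress test is stale, and the FAQ bot is flagged because nobody has written down how it works. The point is the habit, not the tooling: a spreadsheet with the same columns would do.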

Actions for individuals

Stack the durable skills: analytical and creative thinking, resilience, and social influence, plus data and agent-ops literacy. Keep an updated “task map” of your role and target the bits AI is worst at. (World Economic Forum Reports)

Own your data voice: join or start data-trust schemes in your sector; choose services that respect participatory data stewardship. (Ada Lovelace Institute)

Build a portfolio of work: mix wage roles, agent-run micro-work, and civic stipends so you are less exposed to any single shock.
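The “task map” mentioned above can be as simple as a list you revisit every few months. The Python sketch below is one possible shape, offered under stated assumptions: the `Task` fields, the 1-to-5 self-assessed `ai_strength` scale and the thresholds are all hypothetical choices, not an established method.

```python
from dataclasses import dataclass


@dataclass
class Task:
    """One task in a personal task map (all fields are self-assessed)."""
    name: str
    hours_per_week: float
    ai_strength: int  # 1 = AI is poor at this, 5 = AI does it well


def focus_tasks(task_map: list[Task], max_ai_strength: int = 2) -> list[Task]:
    """Tasks where AI is judged weakest, largest time share first."""
    weak = [t for t in task_map if t.ai_strength <= max_ai_strength]
    return sorted(weak, key=lambda t: t.hours_per_week, reverse=True)


def automatable_share(task_map: list[Task], min_ai_strength: int = 4) -> float:
    """Fraction of weekly hours spent on tasks AI is judged to do well."""
    total = sum(t.hours_per_week for t in task_map)
    exposed = sum(
        t.hours_per_week for t in task_map if t.ai_strength >= min_ai_strength
    )
    return exposed / total if total else 0.0


# Illustrative week for the case worker in the opening scene (made-up numbers).
my_role = [
    Task("routine claims processing", 12, 5),
    Task("edge-case judgement calls", 10, 1),
    Task("mentoring apprentices", 6, 2),
    Task("status reporting", 4, 4),
]
targets = [t.name for t in focus_tasks(my_role)]
share = automatable_share(my_role)
```

Here `targets` lists the judgement calls and the mentoring as the places to invest, while `share` shows how much of the week sits in tasks AI already handles well. The scores are subjective; what matters is noticing when they shift.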

Closing note

We (society) missed Keynes’s 15-hour week because we optimised for consumption, not time. We do not have to make that mistake twice. If we aim AI at better jobs, broader services and shared infrastructure, 2075 can look a lot like that morning in your kitchen. The tea still tastes like tea. The work still matters. The society around you works, for more people, more of the time. That is not utopia. That is a plan. (econ.yale.edu)

Selected sources behind this playbook: IMF on AI exposure; WEF Future of Jobs 2025; McKinsey on gen-AI productivity; Autor on task creation; Acemoglu on “so-so automation”; UK and Iceland four-day week evaluations; Finland basic income results; UCL’s Universal Basic Services; EU AI Act and Bletchley Declaration; compute governance research; WHO and ILO on care-economy demand; UK public compute strategy. (NBER, IMF, World Economic Forum, Oxford Academic, UCD Research Repository, Digital Strategy, GOV.UK, cdn.governance.ai, WHO Apps)