Loss of Smell in Coronavirus - The seduction of numbers

Yesterday I caught a brief moment of Professor Van-Tam at great pains to explain that adding loss of smell as a symptom made only a teeny-teeny-teeny-tiny difference to the number of #coronavirus cases that could be predicted.

Now, the thing is, studies have shown this sort of thing:

"For example, a British study released last week collected COVID-19 symptom data from patients through an online app. The data show that almost 60 percent of the 579 users who reported testing positive for the coronavirus said they’d lost their sense of smell and taste. But a significant portion of patients who tested negative for the virus—18 percent of 1,123 people—also reported olfactory and taste troubles."

At first glance this is very confusing - surely if 60% of coronavirus patients report loss of smell, it HAS to be a good predictor, even if/especially since that number is much lower (18%) among people who tested negative (those with other conditions & non-conditions)?

Van-Tam seemed so adamant about the weak predictive value of loss of smell that I figured I would think it through carefully and run some numbers.

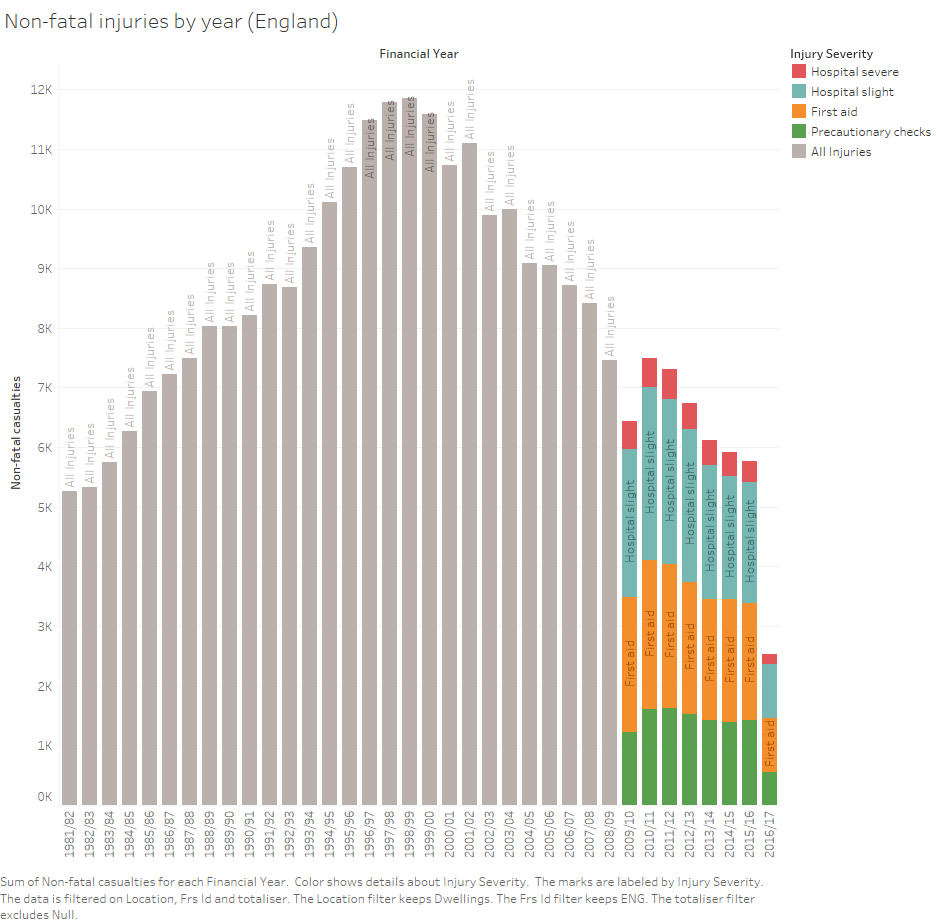

I decided to imagine that "itchiness" was a new observation and plugged in some numbers to calculate how diagnosis plays out. On the left is a very simple Excel spreadsheet which calculates how many people are in each group based on general percentages. I've used some representative percentages that are in the right ballpark to help make the thing (hopefully) more realistic.

It turns out that even if 60% of covid sufferers report itchiness, it is still a lousy predictor of them having the disease.
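The spreadsheet's arithmetic can be roughly sketched in a few lines of Python. The 60% and 18% figures are the ones from the quoted study; the 5% prevalence and the population of 100,000 are assumptions made for this sketch (picked because they reproduce the 5.7 figure in my demonstration model), and the function name is just illustrative.

```python
def itchy_groups(population, prevalence, p_itchy_with_covid, p_itchy_without_covid):
    """Split a population into the two 'itchy' groups, spreadsheet-style."""
    with_covid = population * prevalence
    without_covid = population - with_covid
    return {
        "itchy_with_covid": with_covid * p_itchy_with_covid,
        "itchy_without_covid": without_covid * p_itchy_without_covid,
    }


# Assumed inputs: 100,000 people, 5% prevalence (both assumptions),
# 60% / 18% itchiness rates (taken from the quoted study).
groups = itchy_groups(100_000, 0.05, 0.60, 0.18)

# How many itchy people do NOT have covid for each one who does.
ratio = groups["itchy_without_covid"] / groups["itchy_with_covid"]

# Share of all itchy people who actually have covid.
share = groups["itchy_with_covid"] / sum(groups.values())

print(f"itchy with covid:    {groups['itchy_with_covid']:,.0f}")
print(f"itchy without covid: {groups['itchy_without_covid']:,.0f}")
print(f"ratio without/with:  {ratio:.1f}")
print(f"share with covid:    {share:.1%}")
```

With these assumed inputs, about 3,000 itchy people have covid against about 17,100 who don't, so only around 15% of the itchy people are genuine cases.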

So what's going on here?

This is in the same realm as Simpson's paradox, which I discussed the other day. Statisticians usually file this particular trap under the base rate fallacy.

In this case: a high percentage of a small number (itchy with covid) can end up being much smaller than a small percentage of a large number (itchy without covid).

When the above observations are taken as individual groups, already KNOWING which group a person belongs to, it's certainly intuitive to draw the conclusion that you have a good predictor in the itchy-with-covid group.

But that's only AFTER the fact.

In reality, to start with, you don't have these groups; you are looking for a predictor in order to actually form them from a general population. And that is a different problem.

In total, many, many more people who are itchy will actually belong to the itchy-without-covid group, simply because the people who genuinely have covid are a much smaller part of the population (at least for now).

In my demonstration model, if someone reports being itchy, they are 5.7 times more likely to have something other than #covid19, even though 60% of those who have #covid19 report being itchy!
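For anyone who prefers it written out, here is the same reasoning as a Bayes' theorem calculation. The 60% and 18% rates are from the quoted study; the 5% prevalence is an assumption, chosen because it is the value that gives the 5.7 figure.

$$
P(\text{covid} \mid \text{itchy})
= \frac{P(\text{itchy} \mid \text{covid})\,P(\text{covid})}
       {P(\text{itchy} \mid \text{covid})\,P(\text{covid}) + P(\text{itchy} \mid \text{no covid})\,P(\text{no covid})}
= \frac{0.60 \times 0.05}{0.60 \times 0.05 + 0.18 \times 0.95}
= \frac{0.030}{0.201} \approx 0.15
$$

$$
\frac{P(\text{no covid} \mid \text{itchy})}{P(\text{covid} \mid \text{itchy})}
= \frac{0.18 \times 0.95}{0.60 \times 0.05}
= \frac{0.171}{0.030} = 5.7
$$

So under that assumed prevalence, only about 15% of itchy people have covid, which is the 5.7-to-1 ratio seen from the other side.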

Notes

It doesn't matter what value you start the population at; it all works out the same. So you can treat "population" as "the number of people who report itchiness that day, or week, or who have done so in the last month" etc.

Quote source: https://www.nationalgeographic.co.uk/science-and-technology/2020/04/lost-your-sense-of-smell-it-may-not-be-coronavirus
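A quick way to check this for yourself is the sketch below. The population cancels out of the ratio, so any starting value gives the same answer. The 5% prevalence is again an assumption, and the function name and the three population sizes are arbitrary illustrations.

```python
def odds_against_covid(population, prevalence=0.05, p_with=0.60, p_without=0.18):
    """Itchy-without-covid count divided by itchy-with-covid count."""
    itchy_with = population * prevalence * p_with
    itchy_without = population * (1 - prevalence) * p_without
    return itchy_without / itchy_with


# Three arbitrary starting populations, small to large.
populations = (200, 10_000, 66_000_000)
ratios = [odds_against_covid(n) for n in populations]

for n, r in zip(populations, ratios):
    print(f"population {n:>12,}: ratio {r:.1f}")
```

Every row comes out at the same ratio, because the population appears on both the top and the bottom of the division.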